Startups vs. Enterprises: The AI Adoption Gap

Part 1: Why enterprises struggle where startups succeed

"When people are anxious about job security, layoffs, and the constant push for productivity, they're not in a mindset to learn. We first need to address those anxieties directly—only then can people feel curious and open to exploring AI." – Helen Kupp, co-founder of Women Defining AI

Part one of two columns that tap insights from Helen and a range of executives. Check out Helen’s new course on AI for Business Leaders.

Helen Kupp, co-founder of Women Defining AI

Enterprises Struggle Where Startups Succeed

"God built the world in seven days, but he didn't have an installed base."

This old tech adage perfectly captures why startups adopt transformative technologies while enterprises get left in the dust. I've watched this play out across dozens of organizations: nimble startups integrate generative AI with relative ease, while resource-rich enterprises struggle through molasses-slow adoption cycles.

At a recent BCG executive forum, I joined tech leaders and VCs to discuss this digital divide. The consensus? Integrating new technologies into large enterprises is hard, but that's ultimately a matter of time. The bigger challenge isn't about systems or technology; it's about people.

Hype vs. Your Installed Base

I've worked with startups that are seeing dramatic leverage from genAI tools. A year ago, that meant hiring 20-30% fewer engineers because teams were equally productive with fewer people. Today, some tell me they are doing the work of a 10-person team with just a handful of folks.

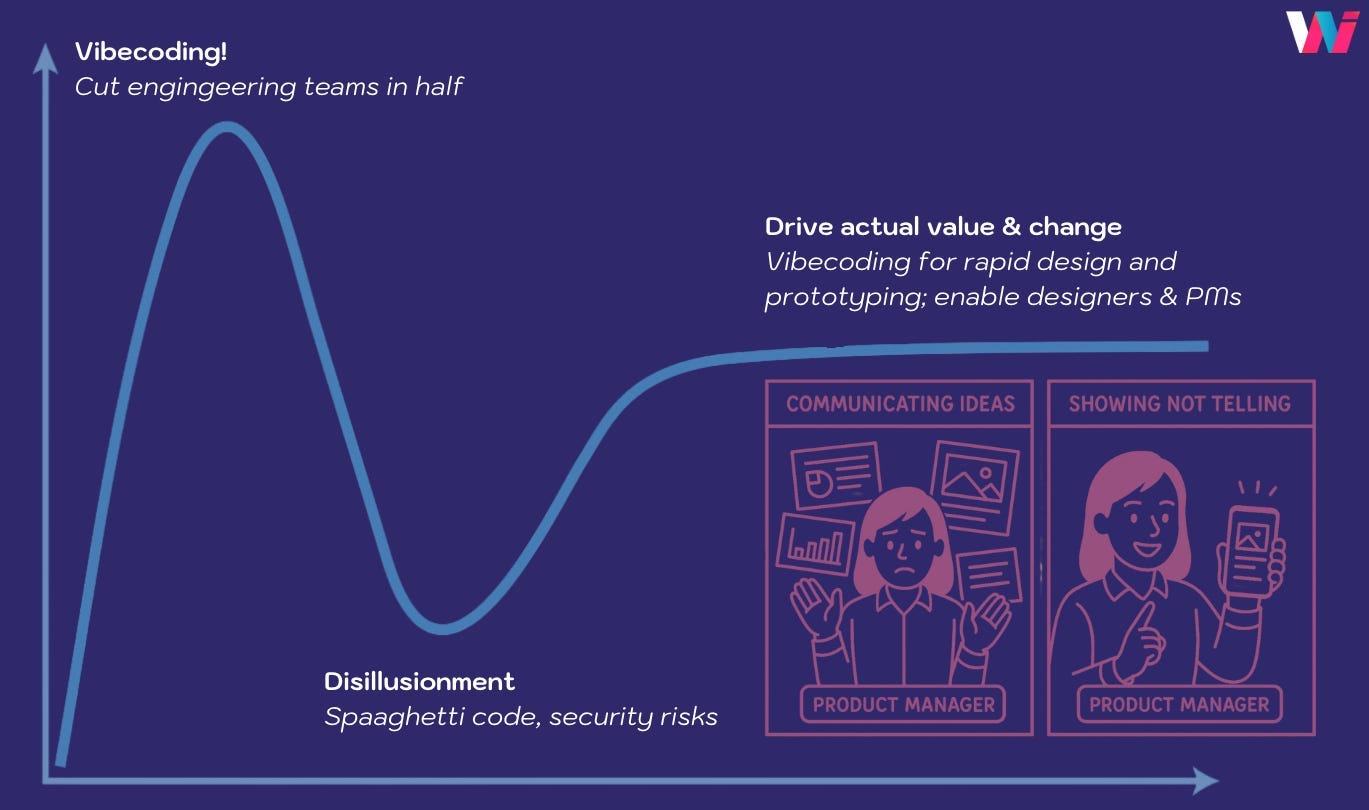

But that's at the early stage: you're building prototypes and initial products with a small, flexible base. You can vibe-code a prototype and rapidly build towards an MVP. What you can't do is vibe-code your way through 11 million lines of code, thousands of special cases, and regulatory compliance. At least, not yet.

The challenge? Executives who swallow the hype without understanding the reality. Helen Kupp, who led a session with our Work Forward Forum last week, described a common scenario: "For the most part, a lot of what I'm hearing on the ground is 'My CEO mandates AI usage and everything... But they have no idea how bad it can be in certain instances.'"

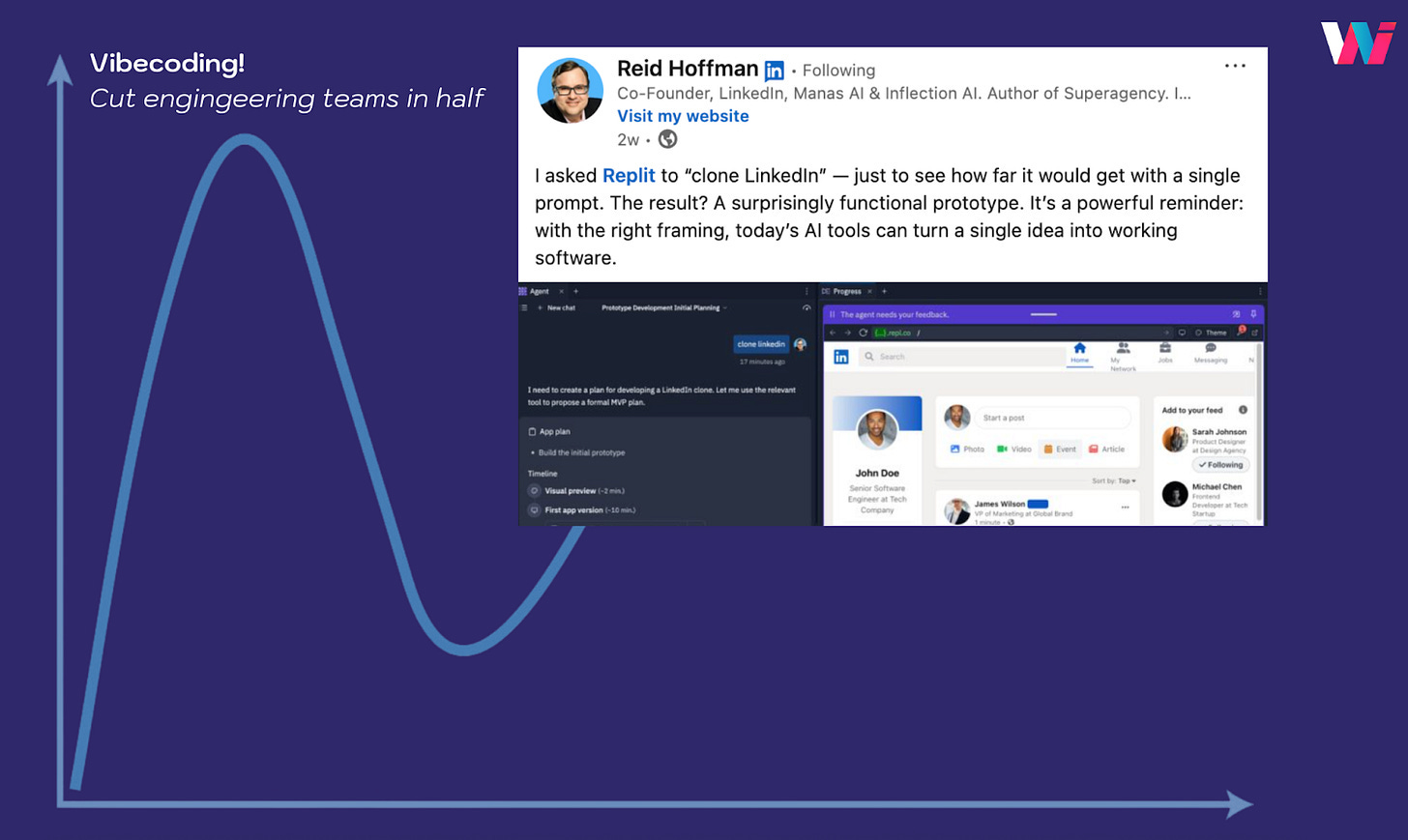

We discussed Reid Hoffman's LinkedIn post on vibe coding and the all-too-common executive reaction it triggers: "Great, we can cut engineering in half!"

But as Helen pointed out, "If at the very top, CEOs are saying 'it will do all the things'—that's not realistic. And people on the ground who are using the tools every day know that that's not true."

I hear this constantly, from developers all the way up to VPs of Engineering. In a complex, successful business, these tools can be helpful, but the benefits are neither as broad nor as deep as the hype suggests.

The Real Obstacle to AI Adoption: Fear

This disconnect breeds resistance, not enthusiasm. Twilio's Noel McNulty made a brilliant observation during our discussion: "We need to slow down to speed up, meaning that a lot of learning is mired in competency and experience."

The rush-to-adopt approach creates precisely the wrong conditions for learning. When employees fear looking incompetent or worry about their jobs, they can't experiment, fail, and grow.

According to Slack research, 47% of workers hide their AI use out of fear of being seen as cheating or incompetent. That surprised the tech leaders at BCG’s event, but it shouldn't have—too many large organizations run on appearances rather than performance.

The paradox is clear: to accelerate AI adoption, you need to create an environment where people feel safe to learn and experiment.

This is where Helen's opening quote comes in, because the context of 2025 matters: "When people are anxious about job security, layoffs, and the constant push for productivity, they're not in a mindset to learn. We first need to address those anxieties directly—only then can people feel curious and open to exploring AI."

For enterprises with complex systems and large teams, this emotional work matters more than the technical integration. While startups can pivot quickly, enterprises need to navigate the complex emotions of thousands of employees who legitimately fear that automation means replacement.

Executive Buy-In: Walking the Talk

The most critical factor? Leaders must demonstrate, not just dictate. Helen was emphatic: "It is so important for executives to show that they're getting hands-on and sharing examples of what they're doing."

She shared a story about a CTO who initially mandated AI coding tools but then agreed to run a pilot with his best engineers. When they provided detailed feedback about the tool's limitations, "he said, 'okay, so I'm going to step back a little bit on the idea that everyone should vibe code and do all this at once.'" Real learning comes from real experience.

Think Different About AI's Value

Here's the bigger revelation about generative AI: its greatest value isn't speeding up experts, but giving superpowers to those without specialized skills.

Helen put it perfectly: "The best leaders that I've talked to, the companies that are thinking about it differently, are not thinking about replacement. They're thinking about how this actually transforms other roles that couldn't do these things before."

Instead of thinking about how vibe coding accelerates engineers, focus on its transformative impact on product managers and designers. Suddenly they can jump straight to rapid prototyping, even testing with customers for feedback, before writing formal requirements docs. This doesn't just accelerate workflows; it fundamentally changes them.

At a recent Accel event, Dennis Cui, VP Engineering at Decagon, highlighted this shift: "We're much faster from concept to prototype, putting something in the customer's hands. That's different."

Nah, Just Pull Out the Sticks

Rather than do the deeper emotional work, some Big Tech leaders have simply changed tactics: raising expectations on employees, especially engineers. Pulling out the sticks might work on some level. Sergey Brin's claim that Google teams need to put in at least 60 hours a week in the office if they want to win is the prime example, and it's not exactly inspirational leadership.

The risk? AI is accelerating the Innovator's Dilemma. Challengers coming at incumbents can now build more, faster, with fewer resources. And it's not just that they lack an installed base; it's that they're motivated. Their people are driven by purpose, their roles are clear, and they have a sense of team.

Team-Centered AI: What Actually Works

At this point, I've left you hanging: plenty on why this is hard, and too little on how to make it work.

In part two, I'll share more from Helen and other leaders on the team-centered strategies that are actually working for organizations that have overcome these emotional barriers, along with practical ways to measure your progress.

What's your organization doing to address the emotional side of AI adoption?

Related Reading:

Reskilling for the Age of AI, Lessons from Udemy and Zapier

Helen’s Course:

No, really: check out Helen's AI for Business Leaders course. It's brand-new, but my recommendation is based on courses I've taken with her and on her work with thousands of people and dozens of leaders. At a minimum, check out the course preview video; it's awesome!

1 On Sergey’s 60 Hours in the Office…

Four-hour “no meetings” blocks at Google would unlock far more productivity than Sergey’s “at least 60 hours a week in office” request. Take it from Chris DiBona, who ran developer infrastructure for Android.

He instituted an afternoon “no meetings” rule for his team and defended it from intruders, drawing plenty of complaints from outside the team. But he did it because it made his team effective.

More is not better, in this case:

“The best developers know that once they’ve been working singularly on a problem for 4, 5, 6, 8 hours in a day, their accuracy and productivity drop into the toilet and they’ll spend more time fixing their stress induced screwups than creating good code.”

Chris also tears into the rest of what’s wrong with Sergey’s hot take, all of which resonates with my experience there.
