Avoiding Workslop

When AI Makes Work Worse, Not Better

Heavy users of artificial intelligence know the technology’s transformative potential. As a writer, I find it an indispensable partner that challenges my thinking, surfaces new insights, and serves as a decent copy editor. As Jonathan Raymond put it recently in Charter, genAI tools let you “write drunk, edit sober”: capture ideas freely, then refine them rigorously.

When used thoughtfully, AI demonstrably improves work quality. Microsoft’s team of senior data scientists and engineers dramatically decreased prototype delivery time while increasing quality. But there’s a darker side emerging.

The Workslop Problem

I’ve been on the receiving end of what BetterUp researchers call “workslop”: AI-generated content that looks polished but lacks substance. The term, from their Harvard Business Review article, describes output that masquerades as good work but fails to meaningfully advance tasks.

The horror stories are multiplying. Two examples shared with me this week:

Managing up: A colleague who “very clearly puts any question into ChatGPT and pastes the response without reading it” is “loathed by everyone else on the team, but our boss loves him because he answers quickly — even though he’s often wrong and we have to clean it up.”

Managing down: A CFO who takes marketing presentations, feeds campaign highlights into ChatGPT, and sends back “better ideas” — often lacking crucial context about target customers or market realities.

The costs are staggering. BetterUp research found that 40% of desk workers received workslop in the past month, with each incident taking an average of two hours to resolve. That translates to $186 a month for each affected employee, or roughly $9 million annually for a 10,000-person company.

That puts workslop in the same cost category as major enterprise software failures or cybersecurity incidents — except it’s entirely self-inflicted.
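The $9 million figure can be reproduced with simple arithmetic. The sketch below is a back-of-the-envelope check, not BetterUp's actual model: it assumes the $186 monthly cost applies only to the 40% of employees who receive workslop, which is the reading under which the quoted numbers line up. The function and constant names are illustrative.

```python
# Figures quoted in the article from BetterUp's research.
SHARE_AFFECTED = 0.40            # share of desk workers who received workslop
MONTHLY_COST_PER_AFFECTED = 186  # dollars per month (assumed: per affected employee)
MONTHS_PER_YEAR = 12


def annual_workslop_cost(headcount, share_affected=SHARE_AFFECTED,
                         monthly_cost=MONTHLY_COST_PER_AFFECTED):
    """Estimate yearly workslop cost in dollars for a company of `headcount`."""
    # Assumption: only the affected share of employees incurs the monthly cost.
    affected_employees = round(headcount * share_affected)
    return affected_employees * monthly_cost * MONTHS_PER_YEAR


if __name__ == "__main__":
    cost = annual_workslop_cost(10_000)
    print(f"${cost:,}")  # prints $8,928,000, which rounds to about $9 million
```

If the $186 applied to every employee instead, the same company would be looking at more than $22 million a year, so the per-affected-employee reading is the one consistent with the article's total.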

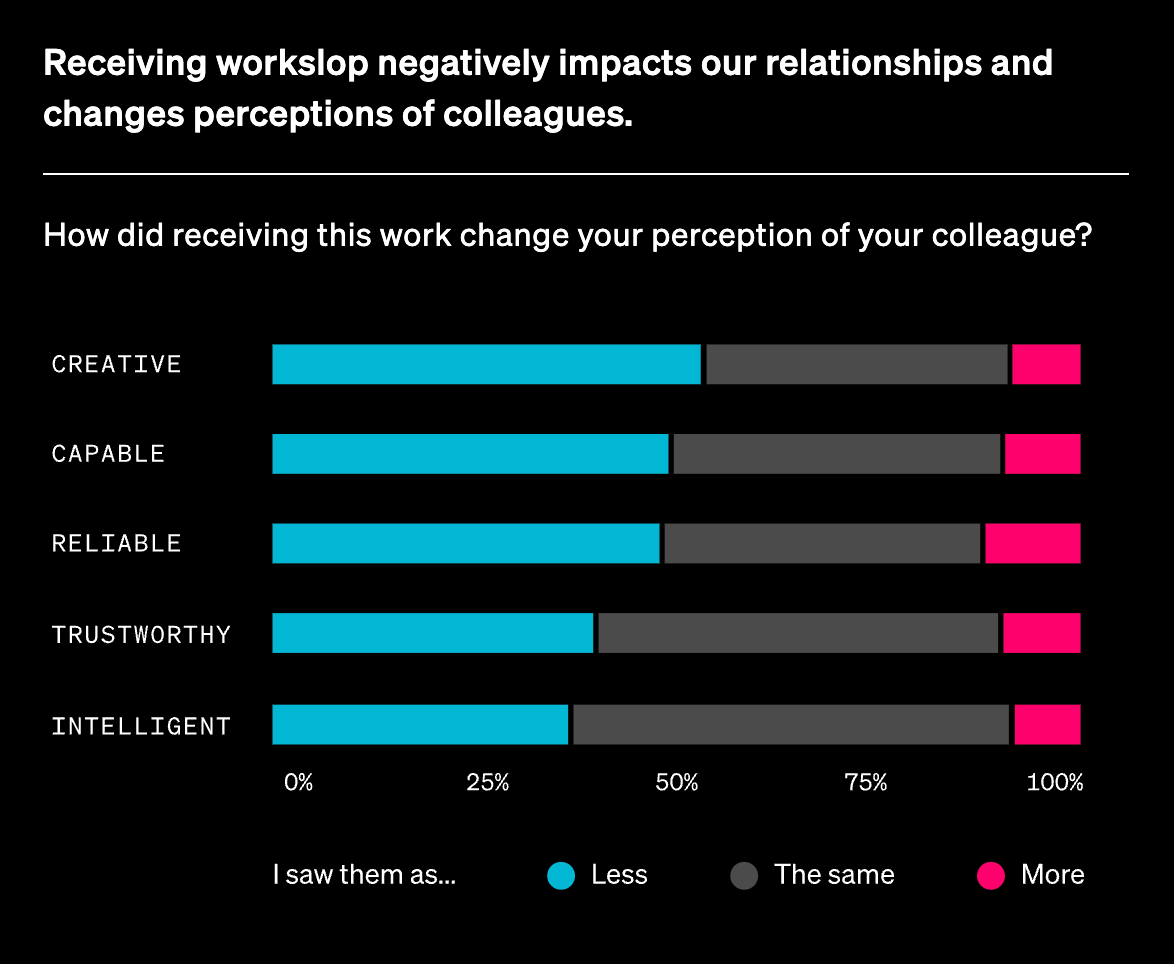

But the financial toll is only part of the damage. Recipients bear the burden of assessing quality and veracity, often doing more work than if they’d started from scratch. Meanwhile, creators damage their reputations as teammates perceive them as less creative, capable, and reliable.

Source: BetterUp, September 2025

Three Strategies to Stop Workslop

1. Establish clear team norms. Have explicit conversations about acceptable AI uses (generating ideas, editing communications, assisting with research) and about what you expect of the results. Make it clear that AI-assisted output remains each person’s responsibility. Beyond preventing workslop, these discussions reduce AI anxiety and increase thoughtful adoption. For a great guide, check out Charter’s best practices.

2. Create structured learning forums. Duolingo’s “FrAIdays” dedicates regular time to AI experimentation, with learning captains for each function who structure sessions and share what works. Other organizations use Slack channels for ongoing feedback and best practices. The goal: develop collective wisdom about quality AI use.

3. Lead by example. Leadership support doubles AI adoption rates, but leaders need to model quality over speed. When executives use AI thoughtfully (showing their work, acknowledging limitations, maintaining human judgment), they set organizational standards for what’s acceptable.

The Bigger Picture

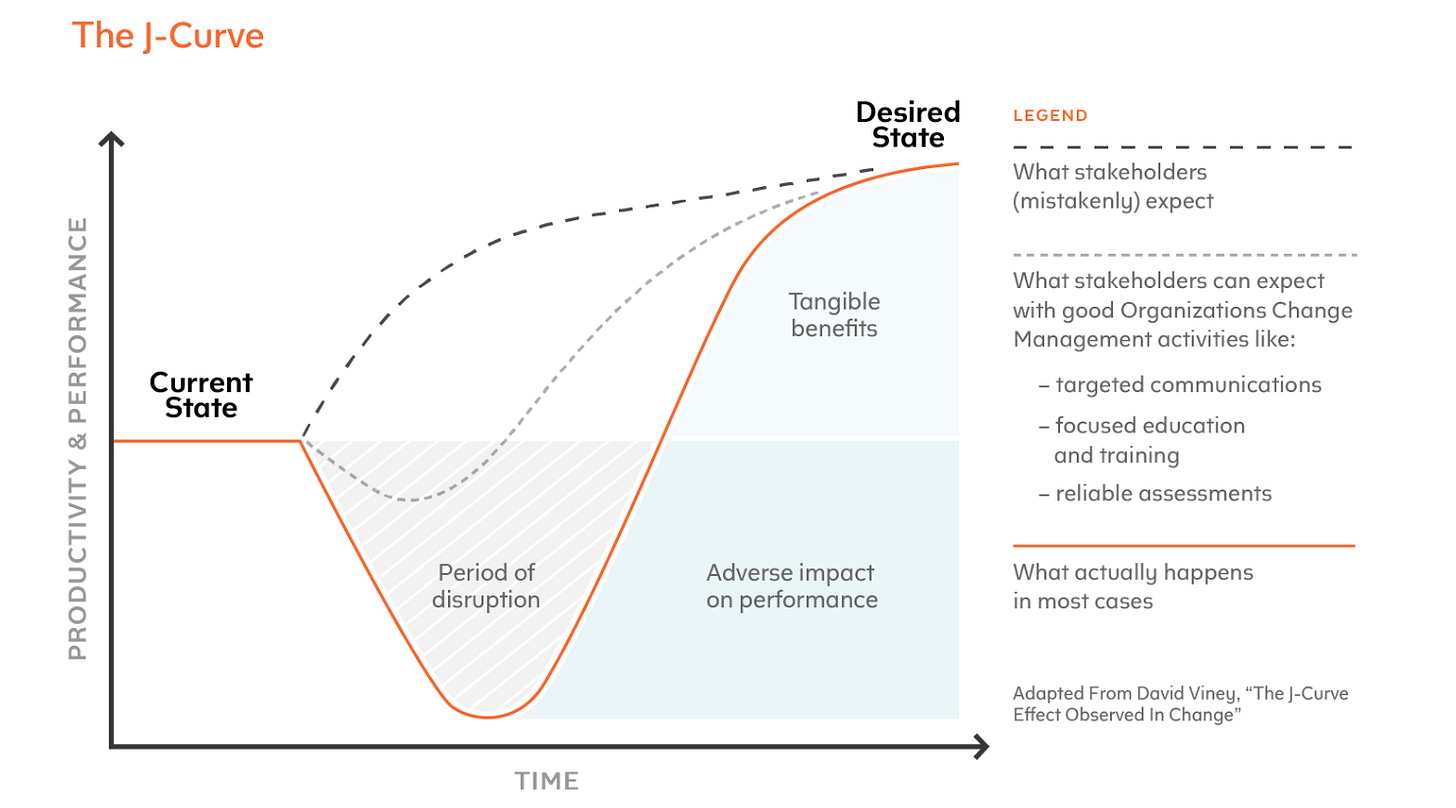

AI adoption follows a predictable J-curve: short-term productivity dips while people learn, followed by meaningful gains.

But workslop short-circuits this learning process, delivering neither the initial dip nor the eventual gains, just perpetual mediocrity.

The solution isn’t avoiding AI but using it wisely. As organizations invest billions in AI tools, the real competitive advantage will come from developing cultures where technology amplifies human judgment rather than replacing it.

The choice is stark: build teams that use AI to think better, or watch them use AI to avoid thinking at all.

What’s your favorite tip for reducing workslop?